What happens when an artificial intelligence is right, but we don’t know why? One of the latest trends in artificial intelligence presents us with exactly this question. It’s called deep learning, and it might be coming to a device near you. Assuming, of course, that it hasn’t already. In this post, I explore deep learning and its ethical implications, and I suggest that rhetoric offers one theoretical framework for critiquing deep learning and designing ethical systems.

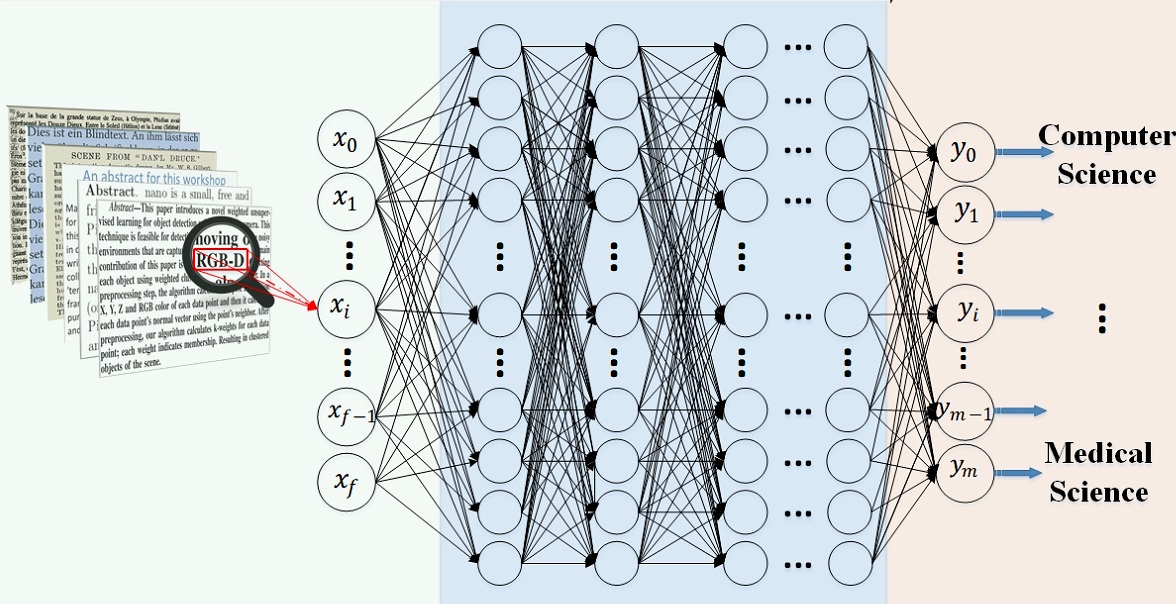

Deep learning is related to artificial intelligence and, more specifically, to machine learning, but the terms are not interchangeable. Deep learning refers to a type of machine learning that implements digital structures loosely modeled on neurological systems and uses these neural networks to process information. Deep learning is deep because it unfolds over many cascading layers, with the output from one layer becoming the input for the next. The Creative Commons image from Wikipedia above illustrates this process in the context of a deep learning text classification system that takes scholarly articles as inputs and outputs subject classifications. The blue shaded region in the middle contains the “hidden” layers of the network, which can become quite complex.
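For readers who want to see the cascade in miniature, here is a rough sketch in Python. It is not the classification system from the image: the layer sizes, the `article_features` input, and the function names are all assumptions made up for illustration, and the weights are random rather than learned, so the output scores mean nothing. The only point is the shape of the process, in which each layer's output is handed to the next layer as its input.

```python
import math
import random


def make_layer(n_inputs, n_outputs, rng):
    """Build one layer: random weights (untrained) and zero biases."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_outputs)]
    biases = [0.0] * n_outputs
    return weights, biases


def apply_layer(layer, inputs):
    """Weighted sum of the inputs for each unit, squashed by tanh."""
    weights, biases = layer
    outputs = []
    for row, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(math.tanh(total))
    return outputs


def forward(layers, inputs):
    """The cascade: each layer's output becomes the next layer's input."""
    activations = inputs
    for layer in layers:
        activations = apply_layer(layer, activations)
    return activations


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical sizes: 4 input features, two hidden layers of 8 units,
# and 3 output scores (imagine three subject classifications).
layer_sizes = [4, 8, 8, 3]
layers = [make_layer(n_in, n_out, rng)
          for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

# Made-up numeric features standing in for one scholarly article.
article_features = [0.2, 0.0, 0.9, 0.4]
scores = forward(layers, article_features)
print(scores)
```

Even in this toy version, the two middle layers produce sixteen intermediate numbers that have no obvious human meaning. A real system would learn its weights from data and might stack many more layers with far more units, which is where the difficulty of explanation discussed below begins.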

While deep learning is not a new theory, we are only now at the point where computers are powerful enough to implement deep learning algorithms capable of handling complex tasks. The advent of cloud computing, which gives smaller consumer devices access to large amounts of data and computational power, has also helped to make these technologies more accessible and increasingly ubiquitous. The tasks where deep learning has shown promise run quite a gamut, from image and art identification and natural language processing to medical diagnostics and even driving. Some of these applications are still in their infancy, but on some narrowly defined tasks deep learning algorithms already outperform their human counterparts.

This will probably come as no surprise to anyone who has seen a science fiction film, but there is a downside (or two) to this new advance in artificial intelligence. Some of these downsides are similar to those we already see in more traditional statistics-based machine learning. For instance, Cathy O’Neil has explored how building algorithmic models from existing data often serves to reify biases already present in those data. “Many poisonous assumptions are camouflaged by math and go largely untested and unquestioned,” O’Neil writes. Deep learning threatens to further this process when it is applied uncritically and when its algorithms are unsupervised, that is, trained on datasets that humans have not first classified. While O’Neil does not focus specifically on deep learning, deep learning raises its own set of problems, chief among them its deep reticence.

In an article in the MIT Technology Review titled “The Dark Secret at the Heart of AI,” Will Knight describes the shift from complicated yet logically-branching AI systems to deep learning as a movement toward “complex machine-learning approaches that could make automated decision-making altogether inscrutable.” And it’s not just that current implementations of deep learning are hard to explain. They may be impossible to explain, even for the engineers working on deep learning projects, because these programs essentially create themselves as they learn.

There are many reasons why the development of deep learning is relevant to the theory and practice of rhetoric. But I’d like to focus on two. First, we need to develop rhetorics such that we can account for this particular class of artificially intelligent actors. Right now, we primarily account for these machines by comparing the results we do see to outcomes we can operationalize, such as the prediction of disease. But this leaves untouched the deep neural network that underpins the machine’s output, which is but one surface of the machine’s existence.

This reminds me of Timothy Morton’s hyperobject, which is an object so broadly distributed over space and time that humans are ill-equipped to comprehend its existence and its implications. Morton is an ecological theorist, and his recurring example is global warming, but it’s easy to see a hyperobject lurking in the depths of deep learning. At any given time we have access only to a superficial slice of the deep learning algorithm, the readily observable outcome of a deep learning process, while the networks that produce those outcomes sprawl silently beneath the surface.

A rhetorical strategy that might allow us to understand deep learning AI as an active agent could be to shift the object of analysis from artificial intelligence as a unit to what Jane Bennett describes as assemblages, or agentive collections of human and non-human actors. We can rhetorically reframe questions about deep learning away from its apparent predictive qualities and toward its location at a nexus of distributed actors and agencies, and in so doing resituate deep learning within broader social, political, and ethical circumstances. The question then becomes not why deep learning works or even how it is working, but what it is doing among other interactors.

Second, we need to explore machine rhetorics such that this particular class of artificially intelligent actors can account for us. Deep learning algorithms are bad at explaining themselves. Without some explanation, it becomes impossible for users to enter into relationships of trust with technology, as Asle H. Kiran and Peter-Paul Verbeek put it. We also run the risk of tautological spirals where the algorithm becomes its own backing—trust the algorithm because the algorithm is right. This opens the door to a broader logical tyranny where technological systems become unavailable as objects of criticism.

If we accept that rhetoric is something like a social process by which contingent truths are negotiated through symbolic interaction, and we expand the definition of social to include human and non-human actors, deep learning algorithms would seem to throw a monkey wrench into the rhetorical machinery given their reticence. Part of the response is no doubt attuning ourselves to these kinds of objects. As Ehren Pflugfelder writes, a distributed theory of agency gives “rhetoricians the freedom to consider a wider range of observable forces in their studies.”

But part of this may also include sharing our understanding of rhetoric with machines rather than accepting their rhetorical incursions as necessarily static. This suggests that for a deep learning application to be ethical, it must also be rhetorical. In other words, we should participate in the design of deep learning systems that engage in meaningful conversations about their choices, understood as moments of distributed agency within networks of human and non-human actors.

As rhetoricians, we need to be asking both how we can more completely account for deep learning and how we can make deep learning more completely accountable. Of course, this raises deeper questions concerning our own natural intelligence, as it were. More specifically, it calls into question the extent to which our own intelligence is scrutable. Alexander Reid, for one, has explored technology, environment, and distributed cognition, demonstrating how we mis/understand the experience of our own consciousness. That being said, rhetoric has long grappled with the nature of interiority and reality and the provisional and probabilistic qualities of social reasoning, and as such offers an important way to assess artificial intelligence. If we approach artificial intelligence as a rhetorical actor, we can reconceptualize the design and evaluation of these systems.